Measuring Front-end Performance With Real Users

When we published our last performance update, Steve Souders left a comment about the lack of real user monitoring (RUM) data for front-end performance. Steve followed up his comment with a blog post, stating that real users typically experience load times about twice as long as your synthetic measurements show. We wanted to test this theory, and share some of our full page load time data from real users as well. To gather our real user data we turned to two sources: LogNormal and the Google Analytics Site Speed Report. Before we present the data, there are a few caveats to make:

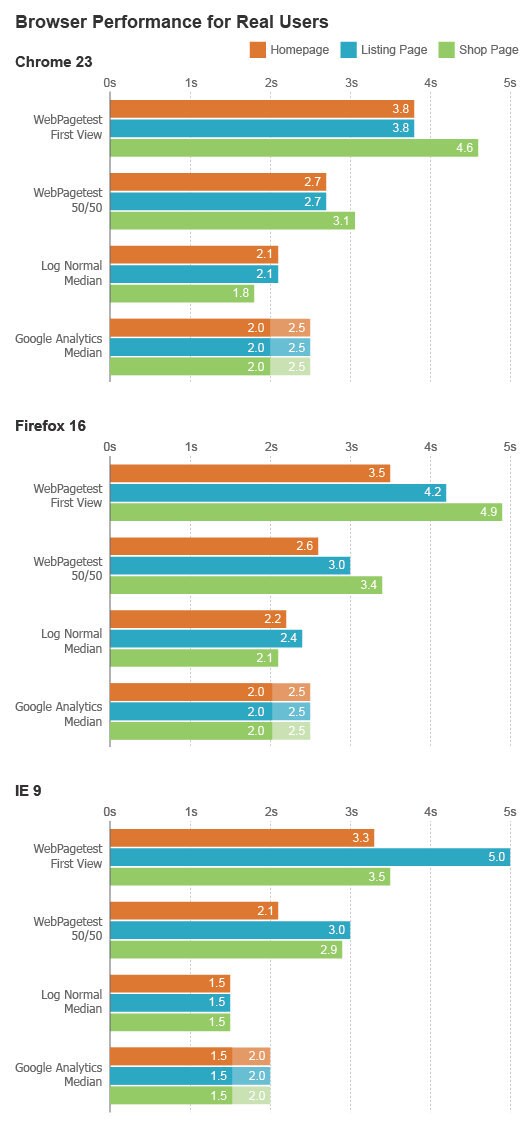

- For the day in question (11/14/12) we are providing data for the top three browsers that our customers use, all of which support the Navigation Timing API. This gives us the most accurate RUM data we can get, but introduces a small bias, since visitors on other browsers are excluded. This sample covered 43% of our customers on that day.

- This isn’t completely apples to apples, since Google Analytics (GA) uses average load time by default and WebPagetest/LogNormal use median load time. The problems with averages are well documented, so it’s a shame that GA still gives us averages only. To get rough median numbers from GA we used the technique described in this post. It produces a range rather than a single value, which is why GA appears as a range on the chart below.

- The WebPagetest numbers are for logged-out users. We don't have logged-in vs. logged-out data from LogNormal or Google Analytics for that day, so those numbers cover all users (both logged-in and logged-out). We expect numbers for logged-out users to be slightly faster, since there is less work to do on the backend and, in some cases, fewer UI elements to render on the front-end.

- The WebPagetest 50/50 numbers are calculated by taking the average of the empty cache and full cache WebPagetest measurements (more on that below).
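To illustrate why a median pulled from bucketed data comes out as a range, and why it can sit well below the average, here is a minimal sketch. It assumes the approach is to find the histogram bucket that contains the middle sample; we are not claiming this is exactly the technique from the post referenced above. The bucket boundaries and counts are made up for illustration and are not our GA data.

```python
from typing import List, Tuple

# Each bucket is (lower_bound_s, upper_bound_s, sample_count).
Bucket = Tuple[float, float, int]


def median_range(buckets: List[Bucket]) -> Tuple[float, float]:
    """Return the bounds of the bucket that contains the median sample."""
    total = sum(count for _, _, count in buckets)
    if total == 0:
        raise ValueError("no samples")
    # 1-based rank of the median sample. For an even total whose two middle
    # samples straddle a boundary, this picks the higher bucket.
    midpoint = (total + 1) / 2
    seen = 0
    for lower, upper, count in sorted(buckets):
        seen += count
        if seen >= midpoint:
            return (lower, upper)
    raise AssertionError("unreachable: midpoint never exceeds total")


def approx_mean(buckets: List[Bucket]) -> float:
    """Estimate the mean by treating every sample as its bucket's midpoint."""
    total = sum(count for _, _, count in buckets)
    weighted = sum((lower + upper) / 2 * count for lower, upper, count in buckets)
    return weighted / total


# Hypothetical load time histogram in seconds (illustrative numbers only).
example_buckets: List[Bucket] = [
    (0.0, 1.0, 120),
    (1.0, 3.0, 400),
    (3.0, 5.0, 250),
    (5.0, 7.0, 130),
    (7.0, 9.0, 60),
    (9.0, 60.0, 40),  # long tail bucket
]

print(median_range(example_buckets))  # the median lies in the 1-3 second bucket
print(approx_mean(example_buckets))   # the tail pulls the mean up to about 4.5 s
```

With these made-up numbers the median falls somewhere between 1 and 3 seconds, while the estimated average lands outside that bucket entirely because of a small number of very slow samples.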

With those points out of the way, here is the data:

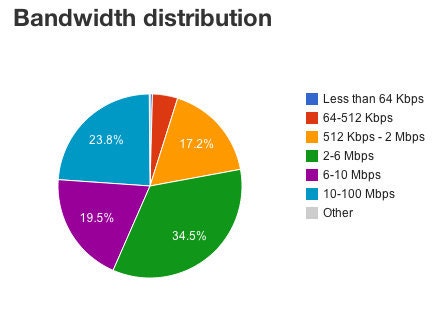

So what’s going on here? Our RUM data is faster than our synthetic data in all cases, and in all cases except for one (Shop pages in Chrome 23) our two RUM sources agree. Let's see if we can explain the difference between our findings and Steve's.

According to Google Analytics, 72% of our visitors are repeat visitors, which probably means that their cache is at least partly full. Since cache is king when it comes to performance, this gives real users a huge performance advantage over a synthetic test with an empty cache. In addition, around 60% of our visits are from signed-in users, who likely visit a lot of the same URLs (their shop page, profile page, their listings), which means that their cache hit rate will be even higher. We tried to account for this with the WebPagetest 50/50 numbers, but it's possible that the hit rate of our customers is higher than that (this is on our list of things to test).

Also, the WebPagetest requests were using a DSL connection (1.5 Mbps/384 Kbps, with 50ms round trip latency), and our users tend to have significantly more bandwidth than that.
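The 50/50 number is a special case of weighting the empty cache and full cache measurements by an assumed cache hit rate. The sketch below shows how the blended figure moves if the real hit rate is higher than 50%. The function name, the load times, and the 75% hit rate are all hypothetical values chosen for illustration; they are not our measurements.

```python
def blended_load_time(empty_cache_s: float, full_cache_s: float,
                      hit_rate: float = 0.5) -> float:
    """Weight full cache and empty cache load times by an assumed hit rate.

    hit_rate is the fraction of page views served with a full cache.
    The default of 0.5 reproduces a plain 50/50 average.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * full_cache_s + (1.0 - hit_rate) * empty_cache_s


# Hypothetical synthetic measurements in seconds (illustrative only).
empty_cache = 4.0
full_cache = 2.0

print(blended_load_time(empty_cache, full_cache))        # 50/50 blend: 3.0
print(blended_load_time(empty_cache, full_cache, 0.75))  # 75% hit rate: 2.5
```

If real visitors hit the cache more often than half the time, a 50/50 blend will overstate the load time they actually see, which is one way synthetic numbers can end up slower than RUM numbers.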

It's encouraging to see that LogNormal and Google Analytics agree so closely, although GA provides a wide range of possible medians, so we can't be 100% confident about this assessment. The one anomaly is Shop pages in Chrome 23, and we don't have a great explanation for this discrepancy. Sample size is fairly similar (GA has 38K samples to LogNormal's 60K), and the logged-in and logged-out numbers are the same in LogNormal, so it's not related to that. The histogram in LogNormal looks pretty clean, and the margin of error is only 56ms. GA and LogNormal do use separate sampling mechanisms, so there could be a bias in one of them that causes this difference. Luckily it isn't large enough to worry too much about.

It’s worth pointing out that when we start looking at higher percentiles in our real user monitoring, things start to degrade pretty quickly. The 95th percentile load time as reported in LogNormal for Chrome 23 is 8.9 seconds, which is not exactly fast (in Google Analytics the 95th percentile falls into the 7-9 seconds bucket). Once you get out this far you are essentially monitoring the performance of last mile internet connectivity, which is typically well beyond your control (unless you can build fiber to your customers' doorsteps).
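To make the gap between the median and the higher percentiles concrete, here is a small sketch of a nearest-rank percentile calculation. It is not how LogNormal or Google Analytics compute their figures (we don't know their exact methods), and the sample load times are invented to show a long tail.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Return the p-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    # 1-based rank of the smallest value with at least p% of samples at or below it.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical page load times in seconds: mostly fast, with a slow tail.
load_times = [1.2] * 10 + [1.8] * 5 + [2.5] * 3 + [8.9, 12.0]

print(percentile(load_times, 50))  # median
print(percentile(load_times, 95))  # 95th percentile, dominated by the tail
```

In this invented sample most page views are quick, so the median stays low, but a couple of slow connections are enough to push the 95th percentile several times higher.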

Overall our results differ from what Steve predicted, but we think this can be largely explained by our high percentage of repeat visitors, and by the fact that we are using a DSL connection for our synthetic tests. The takeaway message here is that having more data is always a good thing, and it's important to look at both synthetic and RUM data when monitoring performance. We will be sure to post both sets of data in our next update.