The Product Hacking Ecosystem

Most product ideas are shitty, yet we spend the majority of our lives working on them.

As a product hacker, you’ll be working on a constant stream of ideas that excite you to the point of obsession: staying up late writing code, thinking about them every waking and non-waking minute. We’ve all admitted that only a minority of our ideas will turn into something with the impact we dream of, but we don’t let that truth prevent us from being excited that this next thing might be the one. Some have accepted that they’re junkies who are only going to get that fix from a great feature once in a long while. Although I admit that I’m a junkie, I haven’t yet become a fatalist.

Web Operations people speak about measuring their work by the Mean Time Between Failures (MTBF). As product hackers, we should be thinking in terms of minimizing Mean Time Between Wins (MTBW). Because it’s difficult to know which ideas are going to blossom into that great feature, a nice proxy for MTBW is Mean Time to Bad Idea Detection (MTTBID).

By building out an ecosystem for you and your team that allows bad ideas to be detected quickly, you can spend your time iterating on the great ideas and shipping your wins quickly while the shitty ideas die a meaningless death somewhere in a pile of other shitty ideas.

The best hackers I know are impatient. As soon as you get an exciting result, you’re going to be talking about it with whoever will listen. An ecosystem of tools that are just there, providing a source of truth that everyone can understand and agree with, is like having a posse of hardened thugs at your back at all times. Instead of excitement going sour when people who haven’t seen the light are doubting you, you can all agree on what’s actually going on. If the numbers you care about are getting better, then great. If your product isn’t something that can be measured easily, or is a long-term bet, you can show that the numbers you care about aren’t getting worse and that it’s safe to push on into the wilderness.

Here are some things we’ve learned about how to build that ecosystem.

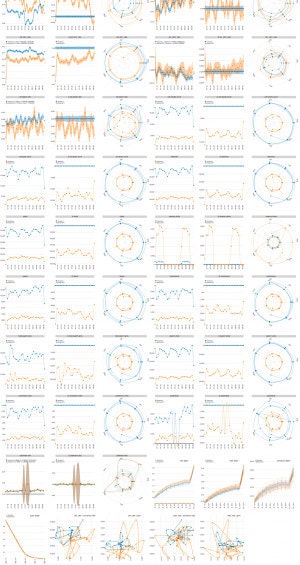

Make Tools for Failing Fast

Ideas can fail at any level of scrutiny. Some ideas don’t pan out when looked at under a microscope. Others don’t work out when you talk about them over a drink. If an idea survives to the point of being shown to users, it can fail when you’re looking at it through a telescope and you’re just not seeing the response you hoped for. We spent some time trying to improve the quality and performance of our relevance sorting algorithm for search results before we made relevance-ordering the site-wide default. During the four-month period where we did this work, we were able to get thirty experiments completed. Of those, eleven were real wins that made it into the final product.
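To put those figures in terms of the MTBW idea from earlier, here is a back-of-the-envelope sketch. The thirty experiments, eleven wins and four months come from the paragraph above; treating a month as 30 days, and the function name `mean_time_between`, are assumptions made purely for illustration.

```python
# Figures from the text: 30 experiments, 11 wins, over a four-month period.
EXPERIMENTS = 30
WINS = 11
PERIOD_DAYS = 4 * 30  # assumption: a month is approximated as 30 days


def mean_time_between(events: int, period_days: float) -> float:
    """Average number of days per event over the period."""
    if events <= 0:
        raise ValueError("need at least one event")
    return period_days / events


win_rate = WINS / EXPERIMENTS
days_per_experiment = mean_time_between(EXPERIMENTS, PERIOD_DAYS)
days_per_win = mean_time_between(WINS, PERIOD_DAYS)

print(f"win rate: {win_rate:.0%}")                             # win rate: 37%
print(f"days per experiment: {days_per_experiment:.1f}")       # days per experiment: 4.0
print(f"days per win (rough MTBW): {days_per_win:.1f}")        # days per win (rough MTBW): 10.9
```

Under that approximation, an experiment finished roughly every four days and a win shipped roughly every eleven. Roughly two out of three ideas died along the way, which is the point: the faster they die, the shorter the gap between wins.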

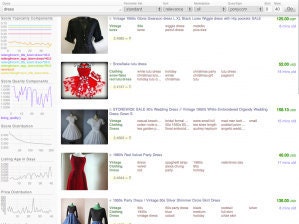

At Etsy, the birth of every idea is the simplest possible implementation that permits experimentation. To give ourselves immediate feedback on the effects of search algorithm changes we created a tool that let us see the new ranking and all of the information we need to understand why a listing is ranked the way it is. The tool let us see this new ranking the moment our search server finished compiling, allowing for rapid iteration on tricky edge-cases, and the ability to quickly detect and kill bad components.

We created a tool that runs a sample of popular and long-tail queries through a new algorithm and displays as much information as can be determined without real people being involved: an estimated percent of changed search results over the universe of all queries, a list of the most strongly affected queries, a list of the most strongly affected Etsy shops, etc.
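A minimal sketch of what that kind of offline comparison could look like is below. It is not our actual tool: the `Ranker` type, the function names, the top-k position-by-position comparison and the toy data are all assumptions chosen to keep the example small, and the per-shop breakdown is left out.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface: a ranker maps a query to an ordered list of listing ids.
Ranker = Callable[[str], List[str]]


def changed_fraction(old: List[str], new: List[str], k: int) -> float:
    """Fraction of the top-k positions whose listing differs between rankings."""
    old_top, new_top = old[:k], new[:k]
    slots = max(len(old_top), len(new_top))
    if slots == 0:
        return 0.0
    changed = sum(
        1
        for i in range(slots)
        if i >= len(old_top) or i >= len(new_top) or old_top[i] != new_top[i]
    )
    return changed / slots


def compare_rankers(
    queries: Sequence[str], baseline: Ranker, candidate: Ranker, k: int = 10
) -> Tuple[float, List[Tuple[str, float]]]:
    """Return (% of sampled queries whose top-k changed, queries sorted by impact)."""
    per_query = {q: changed_fraction(baseline(q), candidate(q), k) for q in queries}
    affected = [q for q, frac in per_query.items() if frac > 0]
    pct_changed = 100.0 * len(affected) / len(queries) if queries else 0.0
    most_affected = sorted(per_query.items(), key=lambda kv: kv[1], reverse=True)
    return pct_changed, most_affected


# Toy data standing in for a real query sample and two real search backends.
BASELINE = {"ring": ["a", "b", "c"], "scarf": ["d", "e", "f"], "mug": ["g", "h", "i"]}
CANDIDATE = {"ring": ["b", "a", "c"], "scarf": ["d", "e", "f"], "mug": ["h", "i", "g"]}

pct_changed, most_affected = compare_rankers(
    list(BASELINE), BASELINE.__getitem__, CANDIDATE.__getitem__, k=3
)
print(f"{pct_changed:.1f}% of sampled queries changed")
for query, frac in most_affected:
    print(f"{query}: {frac:.0%} of top results changed")
```

Comparing position by position is the bluntest possible measure. A real tool would likely weight the top slots more heavily, but even something this crude is enough to kill an idea that changes nothing, or one that changes everything.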

We created tooling for running side-by-side studies where real users were asked to rate which set of search results they preferred for a given query. When a feature was ready to be launched as an A/B test, we were able to see a set of visualizations explaining how our change was performing relative to the standard algorithm.
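The numbers behind a comparison like that can be sketched in a few lines. The example below uses a standard two-proportion z-test, which is one common way to compare a variant against a control and not necessarily what our dashboards compute. The function name and the visitor and conversion counts are made up for illustration.

```python
import math
from typing import Tuple


def two_proportion_z(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> Tuple[float, float, float]:
    """Return (relative lift of B over A, z statistic, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0 or p_a == 0:
        raise ValueError("not enough variation to compare the two groups")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    lift = (p_b - p_a) / p_a
    return lift, z, p_value


# Hypothetical counts: control (standard algorithm) vs. variant (new algorithm).
lift, z, p_value = two_proportion_z(conv_a=1000, n_a=20000, conv_b=1100, n_b=20000)
print(f"relative lift: {lift:+.1%}, z = {z:.2f}, p = {p_value:.3f}")
```

The value of having this computed and charted for you is that nobody has to argue about whether a lift is real. Everyone looks at the same numbers.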

| What A Search A/B Test Looks Like | What A Site Wide A/B Test Looks Like |
|---|---|

The best part is that we don’t think about these tools while building new products and running experiments. We come up with ideas, implement them, and if they do well we ship them. Our conversations are about the product, the code we write is for the product and our shitty ideas are executed on the spot and sloppily buried in shallow graves, as they deserve and as is our wont.

Make Tools that Make Process Disappear

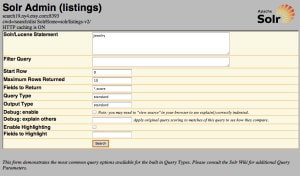

Edward Tufte introduced the concept of “chartjunk”: the distracting stuff on a visualization that isn’t saying anything about the data. Marshall McLuhan made a compelling case that “the medium is the message,” implying that the vehicle through which you perceive something impacts your understanding of it. Just because your paying clients won’t see your internal tooling doesn’t give you license to slap together an ill-considered tool. The medium is the message, and your tools are your medium. Working memory is limited and people are busy. Decisions are worse when getting the answer to a question about your product takes so long that you lose track of what you asked or why it’s important. Decisions are even worse if you never get a chance to ask questions and get answers. Products designed with fewer poor decisions are less shitty than products designed with more poor decisions. GNU wouldn’t exist without GDB.

| Our Non-Shitty Search Query Analysis Tool | Solr's Shitty Query Analysis Tool |
|---|---|

It’s really important to our business that we return great results when people are doing searches on Etsy. It turns out we’re super lazy and if there are any barriers in the way of us asking “why is this item showing up for this query”, we’re just not going to ask the question and it’s not going to get fixed. Our query analysis tool (pictured on the left) helps reduce that barrier to getting an answer.

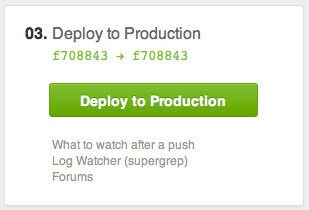

The best information about your product is going to come from real users. Unfortunately, it’s often painful to get your products out into the real world. Having completed an iteration of a product, you’re filled with excitement and fear. You’re hoping you got it all right, but if you didn’t, you’re ready to fix it because you know every intimate detail of your new creation. This state of excitement and readiness is the last thing you want to let go of. Continuous deployment, the practice of pushing your code live the moment it’s ready, is absolutely essential for product hackers.

If you need to wait any non-trivial amount of time between completing something and seeing how well it's performing, you’re not going to be working on that project by the time you get your answer. When you do get your answer, you’re not only going to have to refresh your memory on what you had been working on, but you’re going to have to do the same on whatever else you had started working on. Asking your team to work with patience and discipline has never worked and never will work. Build an ecosystem where doing the right thing is the easiest thing. Build an ecosystem where making great decisions is the easiest thing. Build an ecosystem where the lazy, excitable and impatient really shine.